Year: 2013 Vol. 79 Ed. 3 - (12º)

Original Article

Pages: 336 to 341

The influence of speech stimuli contrast in cortical auditory evoked potentials

Author(s): Kátia de Freitas Alvarenga1; Leticia Cristina Vicente2; Raquel Caroline Ferreira Lopes2; Rubem Abrão da Silva3; Marcos Roberto Banhara4; Andréa Cintra Lopes5; Lilian Cássia Bornia Jacob-Corteletti5

DOI: 10.5935/1808-8694.20130059

Keywords: audiology; auditory pathways; electrophysiology; event-related potentials, P300; evoked potentials, auditory.

Abstract:

Studies of cortical auditory evoked potentials elicited by speech stimuli in normal-hearing individuals are important for understanding how stimulus complexity influences the characteristics of the cortical potential generated.

OBJECTIVE: To characterize the cortical auditory evoked potential and the P3 auditory cognitive potential elicited by vocalic and consonantal contrast stimuli in normal-hearing individuals.

METHOD: Thirty-one individuals aged 7 to 30 years, with no risk factors for hearing, neurological, or language disorders, participated in this study. The cortical auditory evoked potentials and the P3 auditory cognitive potential were recorded in the Fz and Cz active channels using consonantal (/ba/-/da/) and vocalic (/i/-/a/) speech contrasts. Design: a cross-sectional prospective cohort study.

RESULTS: The speech contrast had a statistically significant effect on the latencies of the N2 (p = 0.00) and P3 (p = 0.00) components, and the active channel (Fz/Cz) had a significant effect on P3 latency and amplitude. No such effects were found for the exogenous components N1 and P2.

CONCLUSION: The speech stimulus contrast, vocalic or consonantal, must be taken into account when analyzing the N2 component of the cortical auditory evoked potential and the P3 auditory cognitive potential.


INTRODUCTION

The study of the P3 auditory cognitive evoked potential enables the assessment of neurophysiological cognitive processes that take place in the cerebral cortex, such as memory and auditory attention1. Because it is an objective method, its clinical applicability has been demonstrated in several neurological and mental conditions and in hearing, language, and learning disorders, among others2-6.

The oddball paradigm uses two auditory stimuli, one rare and one frequent, which contrast with each other in frequency, intensity, meaning, or category. Using two recording channels, it is possible to observe the N1, P2 and N2 cortical potentials for the frequent stimulus and the P3 component for the rare stimulus. The number in each component's name indicates the order in which the peaks occur, and the letter indicates a positive (P) or negative (N) peak. It is important to stress that the P3 is considered a cognitive potential, distinct from the others, since it corresponds to the electrical activity in the auditory system when the rare stimulus is discriminated among the frequent ones.
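The structure of an oddball run can be illustrated with a small stimulus-sequence generator. This is a minimal sketch, not the procedure of the Biologic's Evoked Potential System® software: the function name `oddball_sequence`, the 20% rare-stimulus probability in the example, the rule that the run starts with a frequent stimulus, and the rule that no two rare stimuli occur back to back are all assumptions made for illustration (the study's actual presentation parameters are those in Table 2).

```python
import random


def oddball_sequence(n_trials, p_rare, frequent="/ba/", rare="/da/", seed=None):
    """Build a hypothetical oddball sequence of frequent and rare stimuli.

    Assumptions (not taken from the study): the number of rare stimuli is
    round(n_trials * p_rare), the sequence starts with a frequent stimulus,
    and two rare stimuli are never presented consecutively.
    """
    n_rare = round(n_trials * p_rare)
    n_frequent = n_trials - n_rare
    if n_rare > n_frequent:
        raise ValueError("too many rare stimuli to keep them non-adjacent")
    rng = random.Random(seed)
    # Each rare stimulus is placed right after a distinct frequent stimulus,
    # so two rare stimuli can never be adjacent.
    slots = set(rng.sample(range(n_frequent), n_rare))
    sequence = []
    for i in range(n_frequent):
        sequence.append(frequent)
        if i in slots:
            sequence.append(rare)
    return sequence


seq = oddball_sequence(300, 0.2, seed=1)
print(len(seq), seq.count("/da/"))  # 300 60
```

Placing each rare stimulus in a distinct "slot" after a frequent one guarantees the exact rare proportion and the no-repeat constraint without rejection sampling; the same function works for the /i/-/a/ contrast by changing the `frequent` and `rare` labels.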

Studies have characterized the latency and amplitude of the P3 component evoked by pure tones in normal-hearing individuals. However, verbal and non-verbal sounds are processed very differently7-10, and it is very difficult to generalize auditory processing findings from a simple stimulus to a more complex one, such as speech11.

The P3 cognitive auditory evoked potential elicited by speech has been used to obtain information on speech signal processing when behavioral assessment is not accurate, and to help identify deficits in detection or discrimination; such information may guide the individual's rehabilitation12.

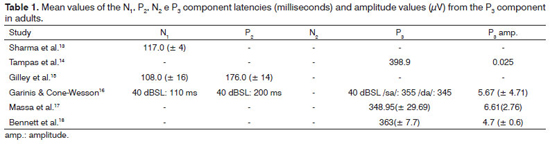

Thus, studies of auditory evoked potentials with speech stimuli are important for understanding how stimulus complexity influences the characteristics of the potential generated, such as latency and amplitude. Table 1 shows the latency values of the cortical auditory evoked potentials and of the P3 cognitive potential, as well as amplitude values, evoked by speech (syllable) stimuli in normal-hearing adults.

The goal of the present study was to characterize the cortical auditory evoked potentials and the P3 cognitive auditory potential elicited by speech stimuli with vowel and consonant contrasts in normal-hearing individuals.

METHOD

This cross-sectional, prospective study was carried out with the approval of the Ethics Committee (process # 069/2003). All participants, or their guardians, signed the Informed Consent Form before testing.

We assessed 31 normal-hearing individuals aged 7 to 30 years (13 females and 18 males), with no history of conditions placing them at risk for auditory, neurological, or language disorders.

Absence of hearing loss was confirmed by pure-tone audiometric thresholds < 25 dB HL, speech recognition index (SRI) scores of 92% for monosyllabic words, type A tympanograms, and acoustic reflexes between 70 and 90 dB SL. We used a Madsen 622® audiometer with TDH-39 headphones, calibrated to the ANSI-69 standard, and an Interacoustics AZ7® immittance meter.
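The inclusion criteria above can be expressed as a single screening check. This is a hypothetical sketch for one ear: the function name, the data layout, and the example frequencies are illustrative, and two interpretations are assumptions rather than statements from the study: the 92% SRI score is treated as a minimum, and every acoustic reflex threshold must lie within 70-90 dB SL inclusive.

```python
def has_normal_hearing(thresholds_db_hl, sri_percent, tympanogram, reflex_db_sl):
    """Hypothetical screening check mirroring the study's listed criteria.

    thresholds_db_hl: {frequency_hz: threshold in dB HL}
    reflex_db_sl:     {frequency_hz: acoustic reflex threshold in dB SL}
    Assumptions: SRI >= 92% passes, and reflex limits are inclusive.
    """
    thresholds_ok = all(t < 25 for t in thresholds_db_hl.values())
    sri_ok = sri_percent >= 92
    tymp_ok = tympanogram == "A"
    reflex_ok = all(70 <= r <= 90 for r in reflex_db_sl.values())
    return thresholds_ok and sri_ok and tymp_ok and reflex_ok


# Illustrative values only, not data from the study.
right_ear = {250: 10, 500: 10, 1000: 5, 2000: 10, 4000: 15, 8000: 20}
reflexes = {500: 85, 1000: 85, 2000: 90, 4000: 90}
print(has_normal_hearing(right_ear, 96, "A", reflexes))  # True
```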

During testing, participants lay supine on a stretcher and were instructed to keep their eyes as still as possible to reduce artifacts caused by eye movement. When they identified the rare stimulus among the frequent ones, they were instructed to perform a simple motor response (raising a hand).

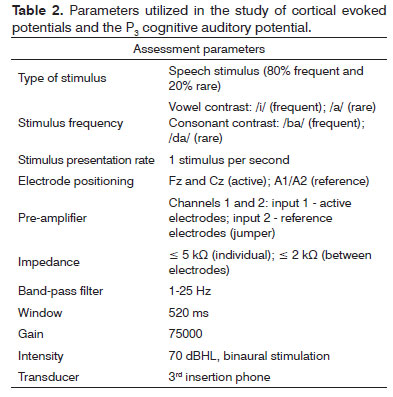

The simultaneous recording of the N1/P2 and N2/P3 complexes in channels Fz and Cz was the criterion for defining the presence of the cortical auditory evoked potentials and the P3 cognitive auditory potential. We used the Biologic's Evoked Potential System® (EP) with the parameters described in Table 2.
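The presence criterion above (a component counts only if it is identified in both Fz and Cz) can be sketched as follows. The function name and the data layout, a mapping from channel to component latencies with `None` for a peak that was not identified, are assumptions for illustration, and the latency values are made up rather than taken from the study.

```python
def component_present(latencies_by_channel, component, channels=("Fz", "Cz")):
    """Return True only if `component` was identified in every channel.

    latencies_by_channel maps a channel name to {component: latency_ms or None};
    None or a missing key means the peak was not identified in that channel.
    """
    return all(
        latencies_by_channel.get(ch, {}).get(component) is not None
        for ch in channels
    )


# Hypothetical recording: N1 identified in Cz only, so it does not count.
recording = {
    "Fz": {"N1": None, "P2": 180.0, "N2": 250.0, "P3": 340.0},
    "Cz": {"N1": 105.0, "P2": 178.0, "N2": 248.0, "P3": 335.0},
}
print([c for c in ("N1", "P2", "N2", "P3") if component_present(recording, c)])
# ['P2', 'N2', 'P3']
```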

The speech sample was collected in an acoustically treated laboratory room. The utterances were recorded with a unidirectional microphone directly onto the computer's sound board using the free software Praat® (www.praat.org), with a sampling rate of 22 kHz. The speaker (a 22-year-old male with a fluid voice quality) was asked to produce the utterances naturally.

The consonant contrast was based on place of articulation (/ba/-/da/). Based on their spectral and temporal characteristics, /ba/ was set as the frequent stimulus and /da/ as the rare one. The [ba] and [da] syllables were extracted from productions of the words [ba'ba] and [da'da], respectively, taking the second syllable of each word. From each isolated syllable, we measured F1, F2 and F3 in their initial and stable portions. Using the bandwidth values of the stable regions of the formant frequencies, we wrote a Praat script (version 4.2.31) and resynthesized each syllable. The [ba] and [da] syllables were 180 ms long.

The vowel contrast /i/-/a/ was established by the frequencies of formants F1 and F2 and by a shorter F3 extension. The vowels [a] and [i] were extracted from isolated productions of the syllables [pa] and [pi], respectively. From the vowel region of each syllable, we selected two glottal cycles with spectral stability and, in Matlab® (version 6.0.0.88), replicated these cycles to obtain a 150 ms vowel. The vowels were then synthesized in Praat® with a script similar to the one described for the syllables.

The linguistic stimuli, previously produced, edited, and recorded on CD by the laboratory, were digitized and stored on drive C of the computer running the Biologic's Evoked Potential System® (EP) software. Stimulus order and presentation level were handled by this software, with the stimuli presented in random order.
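The vowel construction step above (two glottal cycles replicated until the vowel lasts 150 ms) amounts to tiling a short excerpt to a target number of samples. The study did this in Matlab®; the Python sketch below only illustrates the arithmetic. The function name and toy excerpt are hypothetical, and "22 kHz" is assumed to mean 22,050 Hz.

```python
def tile_cycles(cycles, duration_ms, fs_hz):
    """Repeat a short excerpt (e.g., two glottal cycles) to fill duration_ms.

    The output has fs_hz * duration_ms // 1000 samples; the final repetition
    is truncated when the excerpt length does not divide that evenly.
    """
    if not cycles:
        raise ValueError("need at least one sample")
    n_samples = fs_hz * duration_ms // 1000  # integer arithmetic avoids float rounding
    return [cycles[i % len(cycles)] for i in range(n_samples)]


# Toy two-cycle excerpt; a real excerpt would come from the recorded vowel.
excerpt = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
vowel = tile_cycles(excerpt, duration_ms=150, fs_hz=22050)
print(len(vowel))  # 3307
```

Cutting the excerpt at matching points of the glottal cycle (here, zero crossings) keeps the joins between repetitions smooth; plain tiling of an arbitrary excerpt could introduce audible clicks.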

For the analysis, we considered the absolute latencies of the N1, P2 and N2 components of the cortical auditory evoked potentials and of the P3 cognitive auditory potential, as well as P3 amplitude, obtained from channels Fz and Cz.

The means of the dependent variables (latency and amplitude) were compared across channel types (Fz, Cz) and stimulus types (consonant, vowel) using a two-factor repeated-measures analysis of variance (ANOVA).
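For readers who want to see what a two-factor repeated-measures ANOVA computes, the sketch below partitions the variance of a balanced, fully within-subject design (here channel × stimulus) and tests each effect against its own subject interaction. It is a minimal illustration in pure Python, not the software the authors used; the function name and the toy data are hypothetical.

```python
from itertools import product
from statistics import fmean


def rm_anova_2x(data):
    """Two-factor repeated-measures ANOVA for a balanced within-subject design.

    data[s][a][b] is the value of subject s at level a of factor A (e.g., channel)
    and level b of factor B (e.g., stimulus). Returns sums of squares, degrees of
    freedom, and F ratios; each effect is tested against its interaction with subjects.
    """
    n, A, B = len(data), len(data[0]), len(data[0][0])
    subj, lev_a, lev_b = range(n), range(A), range(B)
    G = fmean(data[s][a][b] for s, a, b in product(subj, lev_a, lev_b))
    m_a = [fmean(data[s][a][b] for s, b in product(subj, lev_b)) for a in lev_a]
    m_b = [fmean(data[s][a][b] for s, a in product(subj, lev_a)) for b in lev_b]
    m_s = [fmean(data[s][a][b] for a, b in product(lev_a, lev_b)) for s in subj]
    m_ab = [[fmean(data[s][a][b] for s in subj) for b in lev_b] for a in lev_a]
    m_as = [[fmean(data[s][a][b] for b in lev_b) for s in subj] for a in lev_a]
    m_bs = [[fmean(data[s][a][b] for a in lev_a) for s in subj] for b in lev_b]
    ss = {
        "A": n * B * sum((m - G) ** 2 for m in m_a),
        "B": n * A * sum((m - G) ** 2 for m in m_b),
        "AxB": n * sum((m_ab[a][b] - m_a[a] - m_b[b] + G) ** 2
                       for a, b in product(lev_a, lev_b)),
        "S": A * B * sum((m - G) ** 2 for m in m_s),
        "AxS": B * sum((m_as[a][s] - m_a[a] - m_s[s] + G) ** 2
                       for a, s in product(lev_a, subj)),
        "BxS": A * sum((m_bs[b][s] - m_b[b] - m_s[s] + G) ** 2
                       for b, s in product(lev_b, subj)),
        # Residual: three-way interaction (one observation per cell).
        "AxBxS": sum((data[s][a][b] - m_ab[a][b] - m_as[a][s] - m_bs[b][s]
                      + m_a[a] + m_b[b] + m_s[s] - G) ** 2
                     for s, a, b in product(subj, lev_a, lev_b)),
    }
    df = {"A": A - 1, "B": B - 1, "AxB": (A - 1) * (B - 1), "S": n - 1,
          "AxS": (A - 1) * (n - 1), "BxS": (B - 1) * (n - 1),
          "AxBxS": (A - 1) * (B - 1) * (n - 1)}
    F = {eff: (ss[eff] / df[eff]) / (ss[err] / df[err])
         for eff, err in (("A", "AxS"), ("B", "BxS"), ("AxB", "AxBxS"))}
    return {"ss": ss, "df": df, "F": F}


# Toy values for 3 subjects x 2 channels x 2 stimuli (not study data).
data = [
    [[1, 2], [3, 5]],
    [[2, 2], [4, 7]],
    [[0, 3], [3, 4]],
]
res = rm_anova_2x(data)
print(res["df"]["A"], res["df"]["AxS"])  # 1 2
```

A p-value for each effect can then be read from the F distribution, for example with SciPy's `scipy.stats.f.sf(F, df_effect, df_error)`. With only two levels per factor, as in this study, the sphericity assumption is satisfied automatically.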

RESULTS

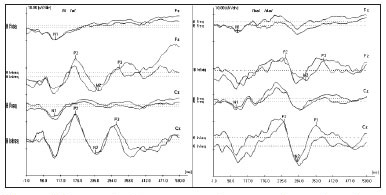

Figure 1 shows an example of the recordings of the cortical auditory evoked potential and the P3 cognitive auditory potential in channels Fz and Cz.

Figure 1. Recording of the cortical auditory evoked potential and the P3 auditory evoked potential from a 29-year-old female participant.

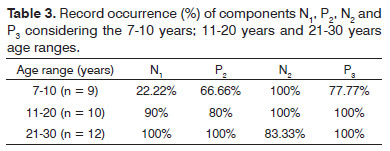

When the occurrence of the N1, P2, N2 and P3 components was examined with the sample divided into three age ranges (7-10, 11-20, and 21-30 years), age influenced the occurrence of the N1 and P2 components (Table 3).
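The kind of tally behind Table 3 (how often each component was recorded within each age range) can be sketched as follows. The age ranges are those stated above; the function names, the input format of (age, set of recorded components), and the sample participants are hypothetical.

```python
from collections import Counter

AGE_BANDS = ((7, 10), (11, 20), (21, 30))  # age ranges used in the study


def age_band(age):
    """Map an age in years to its band label, e.g. 8 -> '7-10'."""
    for lo, hi in AGE_BANDS:
        if lo <= age <= hi:
            return f"{lo}-{hi}"
    raise ValueError(f"age {age} outside 7-30 years")


def occurrence_by_band(participants, component):
    """Count, per age band, how many participants had `component` recorded.

    participants: iterable of (age_years, set_of_recorded_components).
    Returns {band: (n_present, n_total)} in order of first appearance.
    """
    present, total = Counter(), Counter()
    for age, recorded in participants:
        band = age_band(age)
        total[band] += 1
        if component in recorded:
            present[band] += 1
    return {band: (present[band], total[band]) for band in total}


# Hypothetical participants, not study data.
sample = [
    (8, {"N2", "P3"}),
    (9, {"P2", "N2", "P3"}),
    (15, {"N1", "P2", "N2", "P3"}),
    (25, {"N1", "P2", "N2", "P3"}),
]
print(occurrence_by_band(sample, "N1"))
# {'7-10': (0, 2), '11-20': (1, 1), '21-30': (1, 1)}
```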

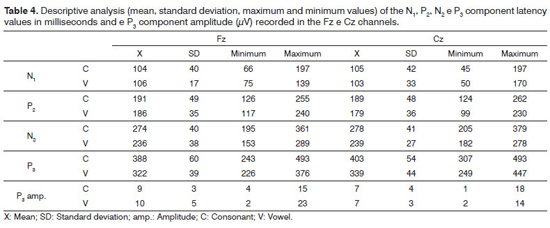

Table 4 depicts the descriptive analysis (mean, standard deviation, maximum and minimum values) of the N1, P2, N2 and P3 component latencies and P3 component amplitude, recorded from channels Fz and Cz, for all the individuals.
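The descriptive measures listed for Table 4 can be computed with Python's standard `statistics` module. This sketch assumes the sample (n - 1) standard deviation and assumes that absent components are stored as `None` and excluded; the study does not state either choice, and the latency values below are illustrative only.

```python
from statistics import fmean, stdev


def describe(values):
    """Mean, sample standard deviation, minimum and maximum of recorded values.

    Entries equal to None (component not recorded) are excluded; at least two
    recorded values are needed for the standard deviation.
    """
    recorded = [v for v in values if v is not None]
    return {
        "mean": fmean(recorded),
        "sd": stdev(recorded),
        "min": min(recorded),
        "max": max(recorded),
    }


print(describe([300, None, 310, 320]))
# {'mean': 310.0, 'sd': 10.0, 'min': 300, 'max': 320}
```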

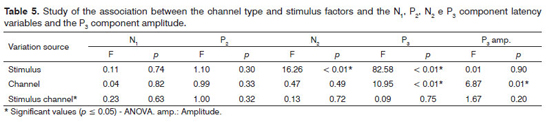

The analysis of the association of the N1, P2, N2 and P3 latencies and of P3 amplitude with channel type and stimulus type showed no differences in the N1 and P2 latencies, whereas stimulus type affected the N2 and P3 latencies. There was also a difference between the active channels (Fz and Cz) in the latency and amplitude of the P3 component (Table 5).

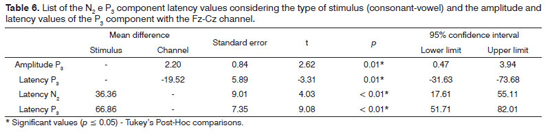

Table 6 shows the Tukey post hoc comparisons for stimulus type (consonant vs. vowel) on the N2 and P3 latencies, and for channel type (Fz vs. Cz) on P3 latency and amplitude.

DISCUSSION

In the present investigation, the cortical auditory evoked potentials and the P3 cognitive auditory potential were recorded with speech stimuli with good reproducibility and morphology, showing that the procedure is viable for clinical practice (Figure 1).

Analysis of the occurrence of the N1 and P2 exogenous components showed that their presence increased with age. The N1 component was practically absent in the 7-10 year age range, corroborating the literature, which states that, depending on the stimulus presentation characteristics, it can only be recorded from approximately 16 years of age19. Considering that the P2 component can also be influenced by age20, these data reflect the maturation of the structures involved in generating the cortical auditory evoked potential.

Nonetheless, age did not influence the occurrence of the N2 and P3 components, which are found more frequently than the N1 and P2 components in children21. The gender variable was not analyzed, because our previous study showed no significant differences between males and females in the P3 auditory cognitive potential22.

In the cortical auditory evoked potentials, the latencies of the exogenous components N1 and P2 did not differ significantly by channel (Fz/Cz) or by stimulus type (/a/-/i/; /ba/-/da/). For the P3 cognitive auditory potential, however, channel type influenced latency and amplitude, as previously reported in other studies22,23. Likewise, stimulus type was an important variable for the latencies of the N2 and P3 components.

The N2 component appears to be associated with the identification, processing, and attention to the rare stimulus, with its latency correlating positively with the difficulty of the discrimination task24. In our study, the speech stimulus influenced the N2 component, with longer latencies for the consonant contrast, suggesting that the consonant contrast is more difficult to discriminate than the vowel contrast. A similar finding has been observed for the P3 component when comparing verbal and non-verbal stimuli and in difficult discrimination conditions14,17,18,25, reinforcing the hypothesis that this task is more difficult26.

However, this finding may also be explained by evidence that vowels and consonants are processed differently by the central auditory system. A study in rats27 compared behavioral discrimination of vowels and consonants with neural recordings from the inferior colliculus and primary auditory cortex, and suggested that consonants and vowels have different representations in the brain. In humans, studies have also reported differences in the activation of central auditory system structures during vowel and consonant discrimination28,29. Therefore, the type of speech contrast may affect the latencies of the N2 and P3 components differently.

Some studies describe a reduction in P3 amplitude as the difficulty of the discrimination task increases14,17,18,25,26. In the present study, however, this correlation was not significant.

In our series, the normal latency values of the N1, P2, N2 and P3 components for the vowel and consonant contrasts are shown in Table 4. A direct comparison between these values and the results of previous studies would be inaccurate, because the methodologies differ and, as shown above, assessment parameters such as stimulus type have a significant influence on the latencies of auditory evoked potentials.

Because different neural structures are activated during the perception of verbal and non-verbal sounds, we stress the importance of using speech stimuli in future studies of the cortical auditory evoked potentials and the P3 cognitive auditory potential.

CONCLUSION

The type of speech contrast (consonant or vowel) must be considered in the analysis of the N2 component of the cortical auditory evoked potentials and of the P3 cognitive auditory potential. This effect was not observed for the N1 and P2 components.

ACKNOWLEDGEMENTS

We would like to thank the Institutional Program of Scientific Initiation Scholarships (PIBIC) from the National Council for Scientific and Technological Development (CNPq) for their support in this study, under process # 110767/2005-5.

REFERENCES

1. Sousa LCA, Piza MRT, Alvarenga KF, Cóser PL. Potenciais Auditivos Evocados Corticais Relacionados a Eventos (P300). In: Sousa LCA, Piza MRT, Alvarenga KF, Cóser PL. Eletrofisiologia da audição e emissões otoacústicas. 2nd ed. Ribeirão Preto: Novo Conceito; 2010. p.95-107.

2. Ethridge LE, Hamm JP, Shapiro JR, Summerfelt AT, Keedy SK, Stevens MC, et al. Neural activations during auditory oddball processing discriminating schizophrenia and psychotic bipolar disorder. Biol Psychiatry. 2012;72(9):766-74. http://dx.doi.org/10.1016/j.biopsych.2012.03.034 PMid:22572033

3. Gao Y, Raine A, Schug RA. P3 event-related potentials and childhood maltreatment in successful and unsuccessful psychopaths. Brain Cogn. 2011;77(2):176-82. http://dx.doi.org/10.1016/j.bandc.2011.06.010 PMid:21820788

4. Reis AC, Iório MC. P300 in subjects with hearing loss. Pró-Fono. 2007;19(1):113-22. PMid:17461354

5. Weber-Fox C, Leonard LB, Wray AH, Tomblin JB. Electrophysiological correlates of rapid auditory and linguistic processing in adolescents with specific language impairment. Brain Lang. 2010;115(3):162-81. http://dx.doi.org/10.1016/j.bandl.2010.09.001 PMid:20889197

6. Wiemes GR, Kozlowski L, Mocellin M, Hamerschmidt R, Schuch LH. Cognitive evoked potentials and central auditory processing in children with reading and writing disorders. Braz J Otorhinolaryngol. 2012;78(3):91-7. http://dx.doi.org/10.1590/S1808-86942012000300016 PMid:22714853

7. Uppenkamp S, Johnsrude IS, Norris D, Marslen-Wilson W, Patterson RD. Locating the initial stages of speech-sound processing in human temporal cortex. Neuroimage. 2006;31(3):1284-96. http://dx.doi.org/10.1016/j.neuroimage.2006.01.004 PMid:16504540

8. Liebenthal E, Binder JR, Spitzer SM, Possing ET, Medler DA. Neural substrates of phonemic perception. Cereb Cortex. 2005;15(10):1621-31. http://dx.doi.org/10.1093/cercor/bhi040 PMid:15703256

9. Husain FT, Fromm SJ, Pursley RH, Hosey LA, Braun AR, Horwitz B. Neural bases of categorization of simple speech and nonspeech sounds. Hum Brain Mapp. 2006;27(8):636-51. http://dx.doi.org/10.1002/hbm.20207 PMid:16281285

10. Samson F, Zeffiro TA, Toussaint A, Belin P. Stimulus complexity and categorical effects in human auditory cortex: an activation likelihood estimation meta-analysis. Front Psychol. 2010;1:241. PMid:21833294

11. Henkin Y, Kileny PR, Hildesheimer M, Kishon-Rabin L. Phonetic processing in children with cochlear implants: an auditory event-related potentials study. Ear Hear. 2008;29(2):239-49. http://dx.doi.org/10.1097/AUD.0b013e3181645304 PMid:18595188

12. Martin BA, Tremblay KL, Korczak P. Speech evoked potentials: from the laboratory to the clinic. Ear Hear. 2008;29(3):285-313. http://dx.doi.org/10.1097/AUD.0b013e3181662c0e PMid:18453883

13. Sharma A, Kraus N, McGee TJ, Nicol TG. Developmental changes in P1 and N1 central auditory responses elicited by consonant-vowel syllables. Electroencephalogr Clin Neurophysiol. 1997;104(6):540-5. http://dx.doi.org/10.1016/S0168-5597(97)00050-6

14. Tampas JW, Harkrider AW, Hedrick MS. Neurophysiological indices of speech and nonspeech stimulus processing. J Speech Lang Hear Res. 2005;48(5):1147-64. http://dx.doi.org/10.1044/1092-4388(2005/081)

15. Gilley PM, Sharma A, Dorman M, Martin K. Developmental changes in refractoriness of the cortical auditory evoked potential. Clin Neurophysiol. 2005;116(3):648-57. http://dx.doi.org/10.1016/j.clinph.2004.09.009 PMid:15721079

16. Garinis AC, Cone-Wesson BK. Effects of stimulus level on cortical auditory event-related potentials evoked by speech. J Am Acad Audiol. 2007;18(2):107-16. http://dx.doi.org/10.3766/jaaa.18.2.3 PMid:17402297

17. Massa CG, Rabelo CM, Matas CG, Schochat E, Samelli AG. P300 with verbal and nonverbal stimuli in normal hearing adults. Braz J Otorhinolaryngol. 2011;77(6):686-90. PMid:22183272

18. Bennett KO, Billings CJ, Molis MR, Leek MR. Neural encoding and perception of speech signals in informational masking. Ear Hear. 2012;33(2):231-8. http://dx.doi.org/10.1097/AUD.0b013e31823173fd PMid:22367094

19. Sussman E, Steinschneider M, Gumenyuk V, Grushko J, Lawson K. The maturation of human evoked brain potentials to sounds presented at different stimulus rates. Hear Res. 2008;236(1-2):61-79. http://dx.doi.org/10.1016/j.heares.2007.12.001 PMid:18207681

20. Wunderlich JL, Cone-Wesson BK, Shepherd R. Maturation of the cortical auditory evoked potential in infants and young children. Hear Res. 2006;212(1-2):185-202. http://dx.doi.org/10.1016/j.heares.2005.11.010 PMid:16459037

21. Martin L, Barajas JJ, Fernandez R, Torres E. Auditory event-related potentials in well-characterized groups of children. Electroencephalogr Clin Neurophysiol. 1988;71(5):375-81. http://dx.doi.org/10.1016/0168-5597(88)90040-8

22. Duarte JL, Alvarenga Kde F, Banhara MR, Melo AD, Sás RM, Costa Filho OA. P300-long-latency auditory evoked potential in normal hearing subjects: simultaneous recording value in Fz and Cz. Braz J Otorhinolaryngol. 2009;75(2):231-6. PMid:19575109

23. Franco GM. The cognitive potential in normal adults. Arq Neuropsiquiatr. 2001;59(2-A):198-200. http://dx.doi.org/10.1590/S0004-282X2001000200008 PMid:11400024

24. Novak GP, Ritter W, Vaughan HG Jr, Wiznitzer ML. Differentiation of negative event-related potentials in an auditory discrimination task. Electroencephalogr Clin Neurophysiol. 1990;75(4):255-75. http://dx.doi.org/10.1016/0013-4694(90)90105-S

25. Geal-Dor M, Kamenir Y, Babkoff H. Event related potentials (ERPs) and behavioral responses: comparison of tonal stimuli to speech stimuli in phonological and semantic tasks. J Basic Clin Physiol Pharmacol. 2005;16(2-3):139-55. http://dx.doi.org/10.1515/JBCPP.2005.16.2-3.139 PMid:16285466

26. Beynon AJ, Snik AF, Stegeman DF, van den Broek P. Discrimination of speech sound contrasts determined with behavioral tests and event-related potentials in cochlear implant recipients. J Am Acad Audiol. 2005;16(1):42-53. http://dx.doi.org/10.3766/jaaa.16.1.5 PMid:15715067

27. Perez CA, Engineer CT, Jakkamsetti V, Carraway RS, Perry MS, Kilgard MP. Different timescales for the neural coding of consonant and vowel sounds. Cereb Cortex. 2013;23(3):670-83. http://dx.doi.org/10.1093/cercor/bhs045 PMid:22426334

28. Jäncke L, Wüstenberg T, Scheich H, Heinze HJ. Phonetic perception and the temporal cortex. Neuroimage. 2002;15(4):733-46. http://dx.doi.org/10.1006/nimg.2001.1027 PMid:11906217

29. Joanisse MF, Gati JS. Overlapping neural regions for processing rapid temporal cues in speech and nonspeech signals. Neuroimage. 2003;19(1):64-79. http://dx.doi.org/10.1016/S1053-8119(03)00046-6

1. PhD, Associate Professor - University of São Paulo; Associate Professor - Department of Speech and Hearing Therapy - School of Dentistry - University of São Paulo, Bauru campus, Brazil.

2. Speech and Hearing Therapist; MSc student in Sciences of the Processes and Communication Disorders - School of Dentistry of Bauru; University of São Paulo, Bauru campus, Brazil.

3. Speech and Hearing Therapist, Specialist in Family and Community Health - Federal University of São Carlos, São Carlos, São Paulo, Brazil.

4. PhD; Professor. Speech and Hearing Therapist - Cochlear Implant and Cleft Lip and Palate Program - Santo Antônio Hospital - Irmã Dulce Social Works, Salvador, Bahia, Brazil.

5. PhD, Associate Professor - University of São Paulo; Associate Professor - Department of Speech and Hearing Therapy - School of Dentistry - University of São Paulo, Bauru campus, Brazil.

University of São Paulo.

Send correspondence to:

Kátia de Freitas Alvarenga

Al. Dr. Octávio Pinheiro Brisola, nº 9-75

Bauru - SP. Brazil. CEP: 17012-901

E-mail: katialv@fob.usp.br

Paper submitted to the BJORL-SGP (Publishing Management System - Brazilian Journal of Otorhinolaryngology) on October 5, 2012; and accepted on January 19, 2013. cod. 10506.

Institutional Program of Scientific Initiation Scholarships (PIBIC) - National Council for Science and Technology Development (CNPq), under individual process: 110767/2005-5.